We’re officially living in the future. Voice-activated apps, once the stuff of sci-fi fantasies, are now part of everyday life. From setting alarms with a whisper to managing your smart home without lifting a finger, voice technology has transformed how we interact with our digital environments. The rise of smart devices and the seamless integration of voice-activated functionalities are not just trends—they represent a major shift in user expectations and human-computer interaction.

Let’s explore this transformation, how it came to be, what it means for businesses and consumers, and where it’s headed next.

Voice-activated apps allow users to interact with devices through speech. These applications use complex algorithms and voice recognition technology to interpret what you say and act accordingly. The goal? Replace physical interaction—touch, type, swipe—with something more natural: your voice.

Instead of manually navigating through menus or typing in queries, you simply speak your command. The app recognizes your speech, processes the intent behind it, and performs the task. It’s fast, intuitive, and increasingly accurate.
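The recognize, interpret, act flow described above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the function names (`recognize_speech`, `parse_intent`, `perform`, `handle`) are hypothetical, the "audio" is already text, and keyword rules stand in for a real speech-to-text engine and NLP model.

```python
from dataclasses import dataclass, field


@dataclass
class Intent:
    name: str
    slots: dict = field(default_factory=dict)


def recognize_speech(audio_text: str) -> str:
    # Assumption: a real app would call a speech-to-text engine here.
    # In this sketch the "audio" is already a string, so we only normalize it.
    return audio_text.strip().lower()


def parse_intent(transcript: str) -> Intent:
    # Toy keyword rules standing in for a trained language model.
    prefix = "set an alarm for "
    if transcript.startswith(prefix):
        return Intent("set_alarm", {"time": transcript[len(prefix):]})
    if "weather" in transcript:
        return Intent("get_weather")
    return Intent("unknown")


def perform(intent: Intent) -> str:
    # Carry out the task and return the spoken reply.
    if intent.name == "set_alarm":
        return f"Alarm set for {intent.slots['time']}."
    if intent.name == "get_weather":
        return "Fetching the forecast."
    return "Sorry, I didn't catch that."


def handle(audio_text: str) -> str:
    # Speech -> transcript -> intent -> action, in that order.
    return perform(parse_intent(recognize_speech(audio_text)))
```

Calling `handle("Set an alarm for 7 am")` walks through all three stages. Keeping the stages separate means any one of them could be swapped for a real engine without touching the others.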

The reality is that millions of people use these services daily—many without realizing just how complex the underlying technology is.

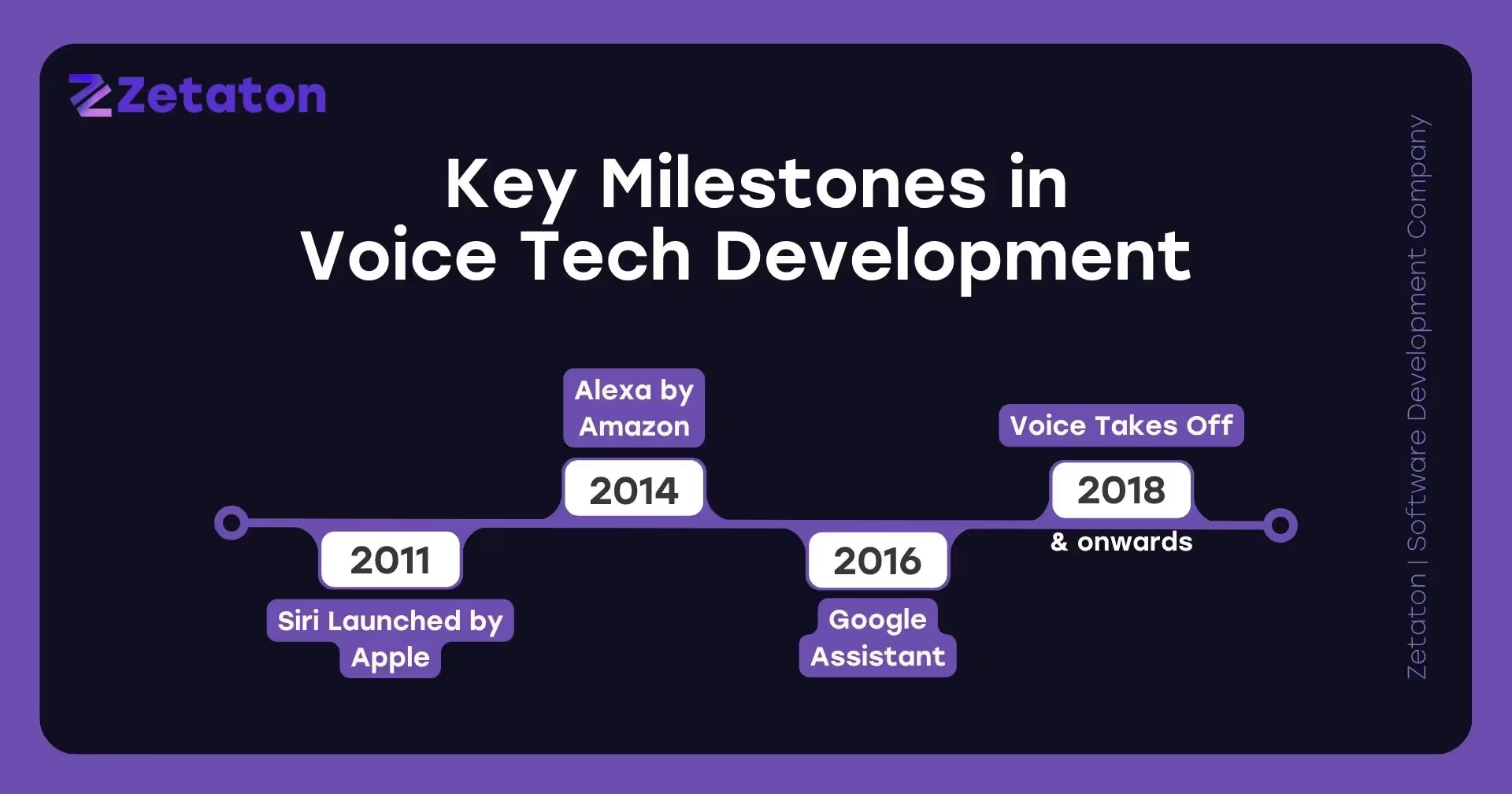

Voice technology has come a long way. In the early days, systems required exact phrasing, often struggling with accents or background noise. Errors were frequent, and usage was limited to basic tasks like dialing contacts or searching simple terms.

Fast forward to today: voice apps can interpret nuanced language, understand follow-up questions, and in some cases pick up on emotional tone. Improvements in Natural Language Processing (NLP) and machine learning mean that talking to your device feels more like chatting with a human than commanding a robot.

These innovations weren't just about new features—they laid the foundation for voice as a primary user interface.

Let’s face it—humans are wired for efficiency. Speaking is faster than typing, especially when you're multitasking. Whether you're driving, cooking, or holding a baby, voice control frees up your hands and attention. It’s not just convenient—it’s becoming essential.

And it’s not just for individuals. Businesses benefit too. Customer service, for example, is increasingly handled through voice-based AI chatbots, speeding up interactions and reducing strain on human reps.

Voice tech isn’t just about ease; it’s about inclusivity. For people with physical disabilities, vision impairments, or age-related limitations, voice commands offer a level of independence that traditional interfaces often can't match. Tasks that were once difficult—sending a text, checking the weather, controlling a thermostat—are now achievable with a few words.

This democratization of technology through voice is a crucial part of its growth.

Imagine writing an email, setting a calendar event, and playing your favorite Spotify playlist—all without stopping what you’re doing. Voice tech enables multitasking on a whole new level. It’s like having a virtual personal assistant by your side 24/7, ready to execute your commands the moment they’re spoken.

Smart speakers like Amazon Echo, Google Nest, and Apple HomePod are essentially hubs for voice interaction. They're designed to be passive listeners—waiting to be activated with a wake word (e.g., "Hey Google") and then ready to carry out commands instantly.

These devices can:

Adding screens to these speakers—smart displays—amplifies the experience by combining visual and voice-based feedback.

Voice tech on mobile devices is nothing new, but it's evolving fast. Today’s phones don’t just understand what you're saying—they understand what you mean. Features like contextual awareness and deep integration with third-party apps make voice assistants incredibly powerful tools.

From hands-free Google searches to real-time language translation, voice-enabled smartphones are redefining how we use mobile tech.

Devices like smartwatches, fitness bands, and even AR glasses are integrating voice control. Users can track workouts, send texts, or activate emergency calls—all without navigating complex menus or even looking at a screen.

In fitness and health, this hands-free interaction is especially helpful. Whether you're running a marathon or monitoring your heart rate, voice simplifies everything.

Refrigerators, ovens, vacuum cleaners, even washing machines now come with voice control. Smart home ecosystems are being built around central voice hubs. You can now say, “Alexa, start the coffee machine,” and it’ll happen before you finish brushing your teeth.

Voice-activated homes are becoming the standard, not the exception.

NLP is the beating heart of voice tech. It allows devices to understand not just the words, but the intent behind them. That extends to slang, idioms, regional phrases, and even the speaker’s mood. It's what lets your assistant know whether you're asking a question, making a command, or telling a joke.
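To make the question-versus-command distinction concrete, here is a deliberately tiny sketch. It is not how production assistants work: they rely on trained models, while this example only looks at the first word. The word lists and the `classify_utterance` name are assumptions made up for illustration, and the wake word is assumed to have been removed already. Spotting a joke is well beyond rules this simple, so it is left out.

```python
# Hypothetical word lists; a real system would learn these patterns from data.
QUESTION_WORDS = {"who", "what", "when", "where", "why", "how",
                  "is", "are", "can", "do", "does"}
COMMAND_VERBS = {"play", "set", "turn", "start", "stop", "call", "send", "open"}


def classify_utterance(text: str) -> str:
    # Normalize case, curly apostrophes, and trailing punctuation.
    cleaned = text.lower().replace("\u2019", "'").strip(" ?!.")
    words = cleaned.split()
    if not words:
        return "unknown"
    # "how's" -> "how", so contractions still match the question list.
    first = words[0].split("'")[0]
    if first in QUESTION_WORDS:
        return "question"
    if first in COMMAND_VERBS:
        return "command"
    return "other"
```

With these rules, "How's the weather?" is labeled a question and "Play my workout playlist" a command. Anything else falls through to "other", which is where a real model would have to do the hard work.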

Artificial Intelligence (AI) and Machine Learning (ML) help voice assistants learn from each interaction. The more you use them, the better they get at recognizing your voice, your habits, and your preferences. Over time, they become smarter, more responsive, and incredibly personalized.

Think of it like teaching a puppy—every command helps it learn how to serve you better.

Let’s clear this up:

Combining both allows for personalized experiences. One assistant, multiple users—each with different preferences and commands.

Security in voice banking is increasing. Users can:

All via voice. Financial institutions are catching on, realizing that convenience doesn’t have to compromise security.

Doctors are using voice to:

For patients, voice assistants offer reminders, emergency contacts, and even mental health check-ins.

Modern vehicles are practically smartphones on wheels. Voice control helps drivers:

All without taking their eyes off the road.

Let’s not sugarcoat it—always-on devices raise eyebrows. The idea that your voice assistant is constantly listening, even when “idle,” worries many users. Companies must maintain transparency about data collection and usage, and provide easy ways to mute, delete, or control recordings.

Voice tech still struggles with dialects, regional slang, and non-native accents. While companies are expanding support for more languages and localization, true global usability remains a challenge.

A misunderstood command can lead to wrong orders, messages sent by mistake, or confused responses. These hiccups frustrate users and erode trust in the tech. Improving accuracy and contextual understanding is a constant focus for developers.

Imagine your assistant not only recognizing you but also adjusting responses based on your mood or time of day. That’s where we’re headed. Personalization will be the new standard, making interactions feel more like conversations with a friend than commands to a machine.

As the Internet of Things (IoT) expands, expect even your window blinds and garage door to listen and respond to your voice. Seamless integration across devices will allow for complete control of your environment—by voice alone.

Future voice assistants will understand why you're making a request. For example, asking “How’s the weather?” in the morning might prompt a different response than asking the same question at night. They’ll know where you are, what device you’re using, and what you’ve recently asked—all to serve you better.
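The weather example can be sketched as a reply that depends on the time of the request. Everything here is an assumption for illustration: the `weather_reply` function is hypothetical, the forecasts are passed in as plain strings, and the 6 p.m. cutoff is an arbitrary choice. A real assistant would also weigh location, device, and recent requests.

```python
from datetime import datetime

EVENING_STARTS_AT = 18  # Assumed cutoff hour; not taken from any real assistant.


def weather_reply(now: datetime, today: str, tomorrow: str) -> str:
    # Before the cutoff, the rest of today is what the user most likely wants.
    if now.hour < EVENING_STARTS_AT:
        return f"Today's forecast: {today}."
    # In the evening, the same question is probably about tomorrow.
    return f"Tomorrow's forecast: {tomorrow}."
```

The same question asked at 7:30 a.m. and at 9 p.m. yields two different answers, which is the kind of context sensitivity the paragraph describes.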

Voice searches are more conversational than text. Instead of typing “pizza Milwaukee,” people now ask, “Where can I find the best pizza near me?” As a result, businesses must optimize content for natural, long-form queries and use structured data to ensure voice assistants can find and serve their content.
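One common form of structured data is schema.org markup embedded in a page as JSON-LD. The sketch below builds a `FAQPage` block from question and answer pairs written the way people actually speak. The `faq_jsonld` helper and the sample answer are hypothetical, and whether a given voice assistant reads this markup is up to that assistant, so treat it as one possible approach, not a guarantee.

```python
import json


def faq_jsonld(pairs):
    # Build schema.org FAQPage markup from (question, answer) tuples.
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


# Placeholder content for illustration only.
markup = faq_jsonld([
    ("Where can I find the best pizza near me?",
     "Our Milwaukee location serves wood-fired pizza every day."),
])
```

The resulting string would typically be placed inside a `<script type="application/ld+json">` tag on the page, with each question phrased as a natural, spoken query.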

Designing for voice means thinking beyond screens. Developers must:

It’s a different mindset than traditional UI design, one where context, flow, and tone matter just as much as functionality.

Building a voice-activated app isn’t plug-and-play. It requires expertise in AI, voice APIs, user behavior, and cross-device syncing.

Zetaton is a leading software development company specializing in voice-activated app development. With a deep understanding of natural language interfaces, real-time data processing, and smart device integration, Zetaton delivers solutions that are secure, scalable, and seriously smart.

Whether you’re creating a voice-first fitness app, a smart home control platform, or an enterprise assistant, Zetaton provides end-to-end development services to bring your ideas to life. They don’t just build apps—they craft experiences that listen, learn, and engage.

The rise of voice-activated apps in smart devices is reshaping how we live, work, and connect with technology. It’s not a temporary trend—it’s the future of interaction.

As voice technology becomes more refined, accessible, and embedded into everyday objects, the opportunities for innovation are endless. For users, it means more convenience and freedom. For developers and businesses, it’s a call to innovate, adapt, and speak the user’s language—literally.

So if you haven’t started thinking about voice tech, now’s the time to find your voice in the digital world.

Voice-activated apps are software programs that allow users to interact with smart devices using spoken commands instead of physical input. These apps work by utilizing technologies such as speech recognition, Natural Language Processing (NLP), and artificial intelligence to interpret user intent and respond accordingly. When used in smart devices like speakers, phones, or appliances, voice-activated apps provide hands-free functionality that makes everyday tasks faster and more convenient.

Voice-activated apps are gaining popularity in smart homes and mobile devices because they offer unmatched convenience, improved accessibility, and seamless multitasking. Instead of navigating through apps or interfaces, users can simply speak to perform tasks like adjusting lighting, playing music, or sending messages. As voice technology becomes more accurate and intuitive, it continues to revolutionize the way users interact with their devices.

Security in voice-activated apps for smart devices is a top concern for both developers and users. While many apps and devices use encryption and voice ID to enhance security, risks still exist, such as accidental activations or unauthorized access. Users should regularly update their devices, review privacy settings, and turn on features like voice ID so that only recognized speakers can trigger sensitive actions.

Voice-activated apps offer significant benefits for users with disabilities or limited mobility by enabling hands-free control of smart devices. These apps make everyday tasks—like sending messages, making calls, or adjusting settings—more accessible to individuals who may find traditional interfaces challenging. This increased accessibility empowers users with greater independence and ease in navigating their digital environments.

Businesses are increasingly leveraging voice-activated apps to improve customer experience and streamline operations. Voice technology can power virtual assistants, automate customer service, enable voice search for products, and even process transactions. By integrating voice-activated apps, companies can offer faster, more intuitive interactions that align with modern consumer expectations.